Six Areas to Monitor to Improve Your Vulnerability Management Program

All ready-to-use KPIs you need to start effective monitoring and continuous improvement.

In my last post, “Follow the trendlines, not the headlines”, I discussed five large-scale statistics that I think should always be kept in mind when looking at the challenges of vulnerability management, so as to keep an eye on how things are going globally.

I will now take a further step toward the actual implementation of a vulnerability management program and go into the details of what every single company should look at.

No process in a company, whether inside or outside the IT and cybersecurity functions, can really be improved without a well-identified set of KPIs that are regularly monitored and analyzed and, where needed, modified and extended so that the process is continuously adjusted.

The vulnerability management process is, of course, no exception.

But once we have set out the vulnerability management policy, which KPIs convey the most insight into how the vulnerability management process is going?

While discussing these KPIs, I will try to make two useful contributions. The first is a list of ready-to-use KPIs that allow us to measure the program. The second is insight into the factors that may affect those KPIs, to help evaluate them correctly.

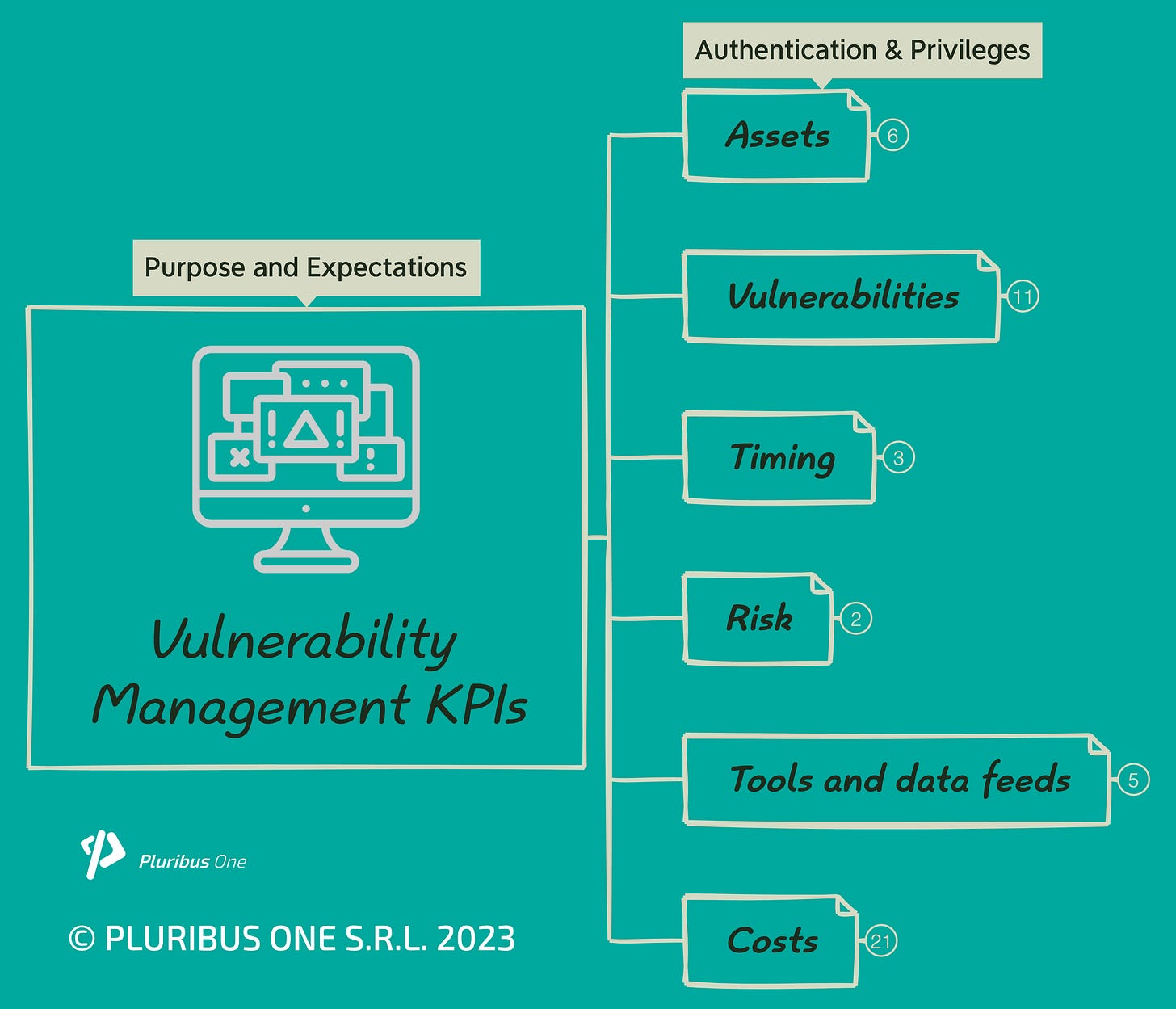

To approach the problem in an organized way, I have grouped the KPIs into six areas (Assets, Vulnerabilities, Timing, Risk, Tools and Data Feeds, Costs). Each area offers a different perspective on the problem and contains several measurements that help represent and measure it properly.

The first area is the one related to Assets. Having an exhaustive inventory of all the assets to protect is the fundamental requirement for every cybersecurity analysis or program.

The number of vulnerabilities is primarily a function of the number of assets we look at: in principle, the higher the number of assets, the higher the number of issues we may find. Since assets can be quite heterogeneous (e.g. user hosts and laptops, servers, web applications and services, databases, firewalls, switches, etc.), the overall number can be split by counting the total per asset category.

Assets can be further qualified by their complexity: a web application with a small number of pages and a limited set of functionalities will reasonably contain fewer lines of code and use fewer third-party components, so we can reasonably expect it to be less exposed than a large and complex application. Thus, where needed, we might include weighting mechanisms that account for the complexity of the assets, in addition to the category they belong to, in order to have a more realistic representation of the actual situation.
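As a minimal sketch of such a weighting mechanism, the snippet below compares a raw vulnerabilities-per-asset figure with one normalized by complexity. The asset names, the weights, and the `vulnerability_density` helper are all hypothetical; how the weights are derived (lines of code, number of third-party components, and so on) is left to each organization.

```python
# Hypothetical assets: (name, category, complexity_weight, open_vulnerabilities).
# The weights are illustrative assumptions, not values from any standard.
ASSETS = [
    ("brochure-site", "web application", 1.0, 2),
    ("e-shop", "web application", 4.0, 6),
]


def vulnerability_density(assets):
    """Return (vulnerabilities per asset, vulnerabilities per unit of complexity)."""
    total_vulns = sum(vulns for _, _, _, vulns in assets)
    total_weight = sum(weight for _, _, weight, _ in assets)
    if not assets or total_weight == 0:
        return 0.0, 0.0
    return total_vulns / len(assets), total_vulns / total_weight


raw, weighted = vulnerability_density(ASSETS)
print(f"per asset: {raw:.1f}, per complexity unit: {weighted:.1f}")
```

With weights like these, a large application that carries more findings than a small one does not automatically look worse once its size is taken into account.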

To look at these assets correctly, we must also not forget that unauthenticated and authenticated assessments have, of course, different perimeters and bring different results, and that in the case of authenticated assessments the perimeter changes further depending on the level of privilege. So, a precise representation of the assets should state whether or not we are assessing them at all the possible levels of authentication and authorization.

Finally, besides the overall number of assets monitored, it is also fundamental to measure what percentage of the assets within our control we are assessing.
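The asset KPIs described above (totals per category and percentage of assets assessed) can be sketched as follows. The inventory records and the function names are hypothetical; a real program would read them from the asset inventory or CMDB.

```python
from collections import Counter

# Hypothetical inventory: (asset_id, category, is_assessed).
INVENTORY = [
    ("srv-01", "server", True),
    ("srv-02", "server", False),
    ("web-01", "web application", True),
    ("lap-01", "laptop", True),
    ("lap-02", "laptop", True),
    ("fw-01", "firewall", False),
]


def coverage_by_category(assets):
    """Return {category: (total, assessed, percent_assessed)}."""
    total = Counter(category for _, category, _ in assets)
    assessed = Counter(category for _, category, done in assets if done)
    return {
        category: (count, assessed[category], 100.0 * assessed[category] / count)
        for category, count in total.items()
    }


def overall_coverage(assets):
    """Percentage of all inventoried assets that are being assessed."""
    if not assets:
        return 0.0
    return 100.0 * sum(1 for _, _, done in assets if done) / len(assets)


for category, (total, done, pct) in coverage_by_category(INVENTORY).items():
    print(f"{category}: {done}/{total} assessed ({pct:.0f}%)")
print(f"overall: {overall_coverage(INVENTORY):.1f}%")
```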

The second set of indicators relates to Vulnerabilities. The number of discovered vulnerabilities is the primary indicator. It depends on the one hand on the number and attributes of the assets, and on the other on how good our capabilities are: primarily, the number and quality of the VA tools and the skills of the people running the assessment. We will cover these in a separate area, but it is important to note the correlation here and to be aware that such factors can make a great difference. Vulnerabilities are not all equal, and to prioritize mitigation correctly we should at least consider their severity and the relevance of the affected system (and thus the possible impact of an attack). Measures of the likelihood of exploitation (e.g. the EPSS score, or scores like the VPR provided by Tenable products) are fundamental for an effective risk mitigation strategy, but in my view they are better suited to operationalizing patching and remediation. I would find it improper to include them as KPIs for the vulnerability management program, also because of how little control we have over them.

Instead, I consider measuring our capability to remediate vulnerabilities (the number of patched vulnerabilities) within a given amount of time to be one of the cornerstones of an evaluation process. How long that period should be depends on the scale of our internal processes (it can range from weeks to months, depending on the kind of analysis we want to make). As with discovery, it is useful to split the overall number into smaller pieces, considering not only the technical severity of the vulnerability and the relevance of the affected systems, but also how complex it is to apply the recommended patch or mitigation. Applying a complex patch to a critical system may require a lot of effort and draw a lot of resources from the team, so reasoning in terms of pure numbers can give a distorted picture. This leads to a notion of overall “cost” per vulnerability managed, for which I have explicitly introduced a dedicated group of KPIs.

Finally, the metrics should measure not only what we have done but also what we have not done, which is represented by the number of open vulnerabilities over time. One might think that this number should ideally be zero, but it does not really have to be: besides vulnerabilities we did not have time to patch, it may also include vulnerabilities that we deliberately decided not to patch. As discussed in my previous post, data from Cisco and the Cyentia Institute tell us that companies can, on average, patch 15% of the discovered vulnerabilities.
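The two measurements discussed in this area, vulnerabilities remediated within a time window and vulnerabilities still open at a given date, can be sketched as below. The findings, dates, and function names are hypothetical and only illustrate the arithmetic.

```python
from datetime import date

# Hypothetical findings: (id, severity, discovered_on, closed_on or None if still open).
FINDINGS = [
    ("V-1", "critical", date(2024, 1, 3), date(2024, 1, 10)),
    ("V-2", "high", date(2024, 1, 5), None),
    ("V-3", "medium", date(2024, 1, 8), date(2024, 2, 20)),
    ("V-4", "low", date(2024, 1, 20), None),
]


def open_on(findings, day):
    """Count findings already discovered on `day` and not yet closed on that day."""
    return sum(
        1
        for _, _, found, closed in findings
        if found <= day and (closed is None or closed > day)
    )


def remediation_rate(findings, start, end):
    """Share of findings discovered in [start, end] that were also closed by `end`."""
    in_window = [f for f in findings if start <= f[2] <= end]
    if not in_window:
        return 0.0
    fixed = sum(1 for f in in_window if f[3] is not None and f[3] <= end)
    return fixed / len(in_window)


print(open_on(FINDINGS, date(2024, 1, 31)))
print(remediation_rate(FINDINGS, date(2024, 1, 1), date(2024, 1, 31)))
```

Filtering `FINDINGS` by severity before calling either function gives the per-severity split suggested in the text.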

Moving forward, the “Timing” with which activities are carried out is another dimension to consider. In this area, we should first measure how long it takes us to discover that we are affected (time to detect) and then the time to patch, which measures the time elapsed between when we record the vulnerability affecting our systems and when it is fully patched. Together, the two timings give an overall indication of the window of exposure for our organization (as reported in my previous post, it is generally around 30 days). An excessive time to detect can be addressed by working on our vulnerability sources and on the frequency with which we run assessments. The time to patch, instead, is much more about the efficiency of our internal processes and the resources (primarily staff) we have available to deploy patches.
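A minimal sketch of these timing KPIs follows. It assumes that time to detect is counted from the date the vulnerability was published, which is one possible convention and not the only one; the records and the `timing_kpis` helper are hypothetical.

```python
from datetime import date
from statistics import mean

# Hypothetical records: (id, published_on, detected_on, patched_on).
# Assumption: time to detect is measured from the publication date.
RECORDS = [
    ("V-1", date(2024, 1, 1), date(2024, 1, 6), date(2024, 1, 26)),
    ("V-2", date(2024, 1, 10), date(2024, 1, 13), date(2024, 2, 12)),
]


def timing_kpis(records):
    """Return mean time to detect, mean time to patch, and mean window of exposure, in days."""
    time_to_detect = [(detected - published).days for _, published, detected, _ in records]
    time_to_patch = [(patched - detected).days for _, _, detected, patched in records]
    exposure = [d + p for d, p in zip(time_to_detect, time_to_patch)]
    return {
        "mean_time_to_detect": mean(time_to_detect),
        "mean_time_to_patch": mean(time_to_patch),
        "mean_window_of_exposure": mean(exposure),
    }


print(timing_kpis(RECORDS))
```

A median or a high percentile may be more informative than the mean when a few long-running patches dominate the average.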

Two other areas to consider are Tools and Data Feeds, and Risk. The first helps us keep track of the technical solutions we are using to achieve the result. In this area, it is advisable to track at least the coverage of the vulnerability management sources (which, if poor, may hurt the time to detect) and the scope (e.g. network scanners vs. web app scanners) and quality of the VA tools. By quality, I mean both which kinds of vulnerabilities (CVEs) or weaknesses (CWEs) a tool is good at discovering and, slightly harder to evaluate, how effective the implementation of the detection algorithms built into the scanners is. We can nevertheless defer this kind of evaluation and KPI to a later stage, when our vulnerability management program has already reached a good degree of maturity.

An overall Risk evaluation is useful to provide a comprehensive picture of how exposed we are in practice (or are supposed to be), set against the other KPIs we are considering. To do so, we can easily keep track of the number of incidents due to known vulnerabilities and introduce a measurement of risk exposure over time. Here, we can consider using one or more indicators, such as CVSS for technical severity or EPSS for the likelihood of exploitation, to get a concise, aggregated view of what is going on for each asset category.
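One possible way to aggregate such indicators per asset category is sketched below. Multiplying the CVSS base score (0 to 10) by the EPSS probability (0 to 1) and summing over open vulnerabilities is an assumption made here for illustration, not a formula defined by either scoring system; the data and the `exposure_by_category` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical open vulnerabilities: (asset_category, cvss_base_score, epss_probability).
OPEN_VULNS = [
    ("server", 9.8, 0.90),
    ("server", 5.0, 0.10),
    ("web application", 7.5, 0.40),
]


def exposure_by_category(vulns):
    """Sum CVSS x EPSS per asset category (an illustrative aggregation, not a standard)."""
    totals = defaultdict(float)
    for category, cvss, epss in vulns:
        totals[category] += cvss * epss
    return dict(totals)


for category, score in exposure_by_category(OPEN_VULNS).items():
    print(f"{category}: {score:.2f}")
```

Recomputing the figure at regular intervals and plotting it gives the risk exposure trend over time for each category.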

Finally, let’s have a look at Costs. There are many sources of cost we can consider when evaluating the vulnerability management program. The most obvious are the monetary costs related to Personnel (not only salaries but also training), Software licenses and Hardware, and, for instance, Subscriptions for data feeds (which I put in the Operational Costs category). When a vulnerability is exploited, we incur Incident Response costs (e.g. the costs of investigating the incident, of recovering and restoring the systems, or of additional staff or suppliers we might ask to support us in handling the incident), as well as those of unforeseen system downtime. Opportunity Costs are represented by scheduled downtime (if patching requires downtime, as in the case of an e-commerce platform that goes offline for patching, there is a potential loss in sales) and by the resources (both personnel and infrastructure) dedicated to vulnerability management that might be pulled away from other valuable projects.

Other sources of cost we can consider (I put them generically under Miscellaneous) are those for Audits and Compliance (ensuring that vulnerability management processes align with industry regulations may require audits, which come with associated costs), those for Communication Tools (platforms or tools used for internal communication about vulnerabilities, patching schedules, and associated risks), and the Cost of Inaction. The latter, while not a direct cost of vulnerability management, represents the potential cost of not investing adequately in this area. The repercussions of a significant breach, both financial and reputational, can far exceed the costs of a well-structured vulnerability management program.
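The cost categories above can be combined into the overall cost per vulnerability managed mentioned earlier in the post. The sketch below uses invented amounts and a hypothetical `cost_per_vulnerability` helper; the Cost of Inaction is left out because it is a potential cost rather than an incurred one.

```python
# Hypothetical yearly costs per category, in one currency. All amounts are invented.
COSTS = {
    "personnel": 120_000.0,
    "software_and_hardware": 30_000.0,
    "operational": 10_000.0,
    "incident_response": 25_000.0,
    "opportunity": 10_000.0,
    "miscellaneous": 5_000.0,
}


def cost_per_vulnerability(costs, remediated_count):
    """Total program cost divided by the number of vulnerabilities remediated."""
    if remediated_count <= 0:
        raise ValueError("remediated_count must be positive")
    return sum(costs.values()) / remediated_count


print(cost_per_vulnerability(COSTS, 400))
```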

To summarize, this post has listed the KPIs to monitor and improve a vulnerability management program. The KPIs are grouped into six areas and include both basic metrics (bare numbers) and less direct KPIs that increase the level of detail and knowledge of the program. Putting such KPIs in the context of our organization, and measuring and revising them regularly, is by far the best way to adapt them to our specific needs.

References

Common Vulnerability Scoring System - https://www.first.org/cvss/

Exploit Prediction Scoring System - https://www.first.org/epss/