Why Isn’t CVSS a Good Indicator for a Vulnerability Management Program?

Vulnerability management is one of the cornerstones of a cyber risk mitigation strategy.

As vulnerabilities regularly appear in our systems and applications, we have to patch them to prevent them from being exploited. And since the number of published vulnerabilities grows every year, a good strategy for prioritising them is more important than ever. As Richard Rumelt wrote in his bestseller "Good Strategy Bad Strategy", FOCUS is the key to designing a good strategy (or, as Steve Jobs might have put it, "strategy is about saying no": focusing on what really matters).

As of the end of May 2023, about 12,000 vulnerabilities have been published this year, approximately 2,000 more than in the same period of 2022, which in turn was about 2,500 higher than in 2021. Whatever the reasons for this increase, what really affects us is that this relentless trend eats up an ever larger share of cybersecurity teams' time.

But what does it mean to focus in a vulnerability management program?

Metrics do exist to evaluate how well a vulnerability management program performs, but let’s first approach the idea more intuitively.

Ideally, if we had a crystal ball, we could decide to focus only on the vulnerabilities that we are 100% sure will be exploited in our environment, leaving the others (which we are equally sure won’t be exploited) completely unpatched.

Yes, unpatched.

We are here to mitigate risks, and if we are 100% sure that a vulnerability will never be exploited, leaving it unpatched has no negative impact on our exposure. On the contrary, it has a positive one: we save the time we would otherwise spend managing it.

Do we have such a crystal ball?

Every time a vulnerability is published, we all rush to check two things in the newly released bulletins. First, which systems and versions are affected (we need to know promptly whether we are impacted). Second, the CVSS score assigned to the vulnerability, to understand how severe the problem is and, typically, to decide whether or not to patch.

But is the CVSS score a useful criterion for this?

CVSS is a measure of the severity of a vulnerability. I would stress the word severity, which according to the Cambridge dictionary is a measure of seriousness, painfulness, difficulty, or unpleasantness.

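It is not, however, a measure of likelihood: it does not tell us how probable it is that a vulnerability will actually be exploited. So what can go wrong when we use it to decide what to patch? Let’s look at a practical example.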

As FIRST (the Forum of Incident Response and Security Teams) points out, coverage and efficiency are two metrics that can be used to measure the quality of a vulnerability management strategy.

Put simply, coverage measures the ability of a strategy to address (patch or mitigate) all the vulnerabilities that are actually exploited: 100% coverage means that we are not leaving unpatched any vulnerability that actually gets exploited. Efficiency, on the other hand, measures the ability to concentrate only on the vulnerabilities that actually get exploited, without wasting time on those that don't (remember, we are here to reduce risk). In other words, coverage is the share of exploited vulnerabilities that we remediated, and efficiency is the share of remediated vulnerabilities that were actually exploited.
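To make the two definitions concrete, here is a minimal Python sketch that computes coverage and efficiency from two sets: the vulnerabilities a strategy remediated and those that were actually exploited. The function names and the placeholder identifiers (`CVE-A`, `CVE-B`, ...) are hypothetical, chosen for illustration only, and the sketch assumes both sets are non-empty.

```python
def coverage(remediated: set[str], exploited: set[str]) -> float:
    """Share of exploited vulnerabilities that we remediated (assumes exploited is non-empty)."""
    return len(remediated & exploited) / len(exploited)


def efficiency(remediated: set[str], exploited: set[str]) -> float:
    """Share of remediated vulnerabilities that were actually exploited (assumes remediated is non-empty)."""
    return len(remediated & exploited) / len(remediated)


# Hypothetical placeholder IDs, not real CVEs.
remediated = {"CVE-A", "CVE-B", "CVE-C", "CVE-D"}
exploited = {"CVE-B", "CVE-E"}

print(coverage(remediated, exploited))    # 0.5: CVE-E was exploited but left unpatched
print(efficiency(remediated, exploited))  # 0.25: three of the four patches addressed unexploited CVEs

# The "crystal ball" strategy patches exactly the exploited set.
print(coverage(exploited, exploited), efficiency(exploited, exploited))  # 1.0 1.0
```

The crystal ball from earlier is simply the strategy whose remediated set equals the exploited set, which is the only way to score 100% on both metrics at once.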

How does a criterion based on the CVSS score behave in terms of coverage and efficiency?

CVSS-based mitigation criteria simply require patching all the vulnerabilities whose score is above a certain threshold. When running a vulnerability management program, we are free to set this threshold ourselves or, if we are subject to a compliance program such as PCI-DSS, the threshold is defined by the standard itself. For instance, here is the PCI-DSS excerpt that defines it:

“To demonstrate compliance, internal scans must not contain high-risk vulnerabilities in any component in the cardholder data environment. For external scans, none of those components may contain any vulnerability that has been assigned a Common Vulnerability Scoring System (CVSS) base score equal to or higher than 4.0.”
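A threshold rule of this kind fits in a few lines. The sketch below is a hypothetical illustration (the `Vulnerability` type and `must_patch` function are not part of any standard or tool) that uses the PCI-DSS external-scan value of 4.0 as the default and, following the quoted wording, counts a score equal to the threshold as in scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vulnerability:
    cve_id: str        # hypothetical placeholder identifiers in the example below
    base_score: float  # CVSS base score, 0.0 to 10.0


def must_patch(vulns: list[Vulnerability], threshold: float = 4.0) -> list[Vulnerability]:
    """Return the findings a pure CVSS threshold rule requires us to patch.

    ">=" mirrors the PCI-DSS wording "equal to or higher than 4.0".
    Note that the rule looks only at severity: nothing about likelihood of exploitation.
    """
    return [v for v in vulns if v.base_score >= threshold]


findings = [
    Vulnerability("CVE-A", 3.9),
    Vulnerability("CVE-B", 4.0),
    Vulnerability("CVE-C", 9.8),
]
print([v.cve_id for v in must_patch(findings)])  # ['CVE-B', 'CVE-C']
```

The only input the rule consumes is the base score, which is exactly why it cannot, by construction, tell exploited vulnerabilities apart from unexploited ones.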

Does this ensure high efficiency and coverage?

Let's analyze it with a graphical example.

Let’s assume that:

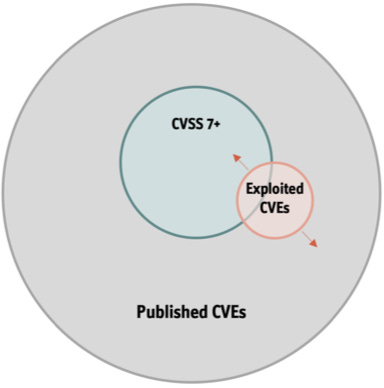

The grey circle represents all the Published CVEs;

The green circle represents all the CVEs that have a base score >=7.

The question is: where does the red circle, representing the exploited CVEs, lie with respect to the other two?

The first possible scenario is that only vulnerabilities with a CVSS score above a given threshold are exploited. In the picture, we could assume that the red circle contains all the CVEs with a base score above, say, 8.6. This would be a kind of ideal scenario: if we could identify the right threshold (that is, the CVSS value above which vulnerabilities are actually exploited), we could achieve 100% coverage and 100% efficiency. In the situation shown in the picture, where we patch everything scoring 7 or more, coverage would still be 100%, whilst efficiency would be lower, since we would also be patching vulnerabilities that are never exploited.
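The first scenario can be reproduced with a handful of made-up scores. This Python sketch assumes, as the scenario does, that exactly the CVEs scoring above 8.6 are exploited, and evaluates the rule "patch if the base score is >= threshold" both at the picture's threshold of 7 and at a threshold just above 8.6. The scores, identifiers and the `evaluate` helper are hypothetical, not real data.

```python
# Hypothetical CVSS base scores; these are NOT real CVEs or real data.
scores = {
    "CVE-1": 5.3, "CVE-2": 7.2, "CVE-3": 7.5,
    "CVE-4": 8.8, "CVE-5": 9.8, "CVE-6": 6.1,
}

# Scenario 1 assumption: exactly the CVEs scoring above 8.6 get exploited.
exploited = {cve for cve, s in scores.items() if s > 8.6}


def evaluate(threshold: float) -> tuple[float, float]:
    """(coverage, efficiency) of the rule 'patch if base score >= threshold'."""
    patched = {cve for cve, s in scores.items() if s >= threshold}
    hits = patched & exploited
    return len(hits) / len(exploited), len(hits) / len(patched)


print(evaluate(7.0))  # (1.0, 0.5): everything exploited is patched, half the work is wasted
print(evaluate(8.7))  # (1.0, 1.0): the "right" threshold, known only with hindsight
```

In practice nobody knows the 8.6 cut-off in advance, which is why this ideal case is of little help when setting a real threshold.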

A second possible scenario is one where all the exploited vulnerabilities score below our CVSS threshold. Patching based on CVSS would then lead us to patch only vulnerabilities that are never exploited (0% efficiency), while all the exploited ones would remain completely unpatched (0% coverage). In other words, we would have wasted our time without reducing the risk at all. This doesn't happen in reality, but CVSS scoring provides no guarantee that it can't.

What happens most frequently is a scenario where neither efficiency nor coverage reaches 100%, because only a fraction of the exploited vulnerabilities happens, by chance, to lie above the CVSS threshold. I would stress the words "by chance" here since, again, CVSS provides no guarantee in this respect (nor was it designed to provide one).
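The more realistic case can be sketched the same way, assuming (hypothetically) that exploitation is unrelated to the score, so exploited CVEs land on both sides of the threshold. The sketch also shows what happens when the threshold is lowered to the PCI-DSS value of 4.0. All scores, identifiers and the `evaluate` helper are made up for illustration.

```python
# Hypothetical scores and exploitation data, for illustration only.
scores = {
    "CVE-1": 4.3, "CVE-2": 5.9, "CVE-3": 7.5,
    "CVE-4": 8.1, "CVE-5": 9.8, "CVE-6": 6.5,
}
# Assumption: exploitation does not follow the score, so exploited CVEs
# fall both above and below the threshold.
exploited = {"CVE-2", "CVE-5"}


def evaluate(threshold: float) -> tuple[float, float]:
    """(coverage, efficiency) of the rule 'patch if base score >= threshold'."""
    patched = {cve for cve, s in scores.items() if s >= threshold}
    hits = patched & exploited
    return len(hits) / len(exploited), len(hits) / len(patched)


for threshold in (7.0, 4.0):
    cov, eff = evaluate(threshold)
    print(f"threshold {threshold}: coverage {cov:.2f}, efficiency {eff:.2f}")
# threshold 7.0: coverage 0.50, efficiency 0.33
# threshold 4.0: coverage 1.00, efficiency 0.33
```

Lowering the threshold buys coverage only by patching more; it cannot supply the information about likelihood that CVSS simply does not carry.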

To put some real numbers on this: as of April 20th, 2023, among the 9,692 vulnerabilities published in 2023, only 1,050 had a CVSS score <= 4. Following the PCI-DSS prescriptions, we would therefore have to address roughly 90% of the published vulnerabilities, certainly paying a very high price in terms of efficiency.
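The "roughly 90%" follows from simple arithmetic on the two figures quoted above. Treating all 1,050 low-scoring vulnerabilities as exempt is a slight approximation, since a score of exactly 4.0 is counted among them but is still in scope for PCI-DSS external scans.

```python
published_2023 = 9_692  # vulnerabilities published in 2023 as of April 20th, 2023 (from the text)
cvss_at_most_4 = 1_050  # of which had a CVSS score <= 4 (from the text)

to_address = published_2023 - cvss_at_most_4
share = to_address / published_2023
print(to_address, f"{share:.1%}")  # 8642 89.2%
```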

This is why the EPSS (Exploit Prediction Scoring System) was introduced a few years ago: it estimates the likelihood that a vulnerability will be exploited and turns out to be much more effective at prioritising vulnerabilities. But I will cover it in a separate blog post.

In the meantime, for those of you interested in more real numbers, I recommend FIRST's page on the EPSS model (https://www.first.org/epss/model), which inspired this post and provides insightful metrics and comparisons between CVSS and EPSS v1, v2 and v3.
